Just before the Second World War, a Big Idea was detonated: the idea of the computer. The world has never been the same.

Princetonians played a key role. Here graduate student Alan Turing *38 finalized his landmark paper, “On Computable Numbers,” the light-bulb moment in which humankind first discovered the concept of stored-program computers. And here one of his professors, John von Neumann, would build just such a device after the war, MANIAC at the Institute for Advanced Study, forerunner of virtually every computer on the planet today.

Of course other people were involved, and other institutions — Penn had its pioneering wartime ENIAC machine — but Turing and von Neumann arguably were the two towering figures in launching computers into the world. As George Dyson claims in his new book on early computing at the Institute, Turing’s Cathedral, “the entire digital universe” can be traced to MANIAC, “the physical realization” of Turing’s dreams.

June 2012 marks the centennial of Turing’s birth in London, and universities around the world — including Princeton and Cambridge, where Turing did the research that led to his landmark paper — are celebrating with conferences, talks, and even races. Mathematicians are calling 2012 “Alan Turing Year.” Since the year also is the 60th anniversary of the public unveiling of MANIAC, this seems a particularly good time to recall the part Princeton played in the birth of all things digital.

Turing was a 22-year-old math prodigy teaching at Cambridge when, in spring 1935, he decided to further his studies by coming to Princeton. He had just met the University’s von Neumann, a Hungarian-émigré mathematician then visiting England. By the time he encountered Turing, von Neumann was the world-famous author of 52 papers, though only 31 years old.

Turing’s specialty was the rarified world of mathematical logic, and he wanted to study near top expert Alonzo Church ’24 *27, another Princeton professor. The legendary Kurt Gödel was here, too, although nervous breakdowns made him frequently absent. All these geniuses had offices in Fine Hall (today’s Jones Hall), where the Institute for Advanced Study temporarily shared quarters with the University’s renowned math department.

Turing arrived in Princeton in October 1936, moving into 183 Graduate College and living alongside several of his fellow countrymen — enough for a “British Empire” versus “Revolting Colonies” softball game. A star runner, Turing enjoyed playing squash and field hockey and canoeing on Stony Brook. Still, Turing made few close friends. He was shy and awkward, with halting speech that has been imitated by actor Derek Jacobi in the biopic movie Breaking the Code. Being homosexual, Turing felt like an outsider.

Soon the postman delivered proofs of Turing’s article for a London scientific journal. The young author made corrections, then mailed it back: “On Computable Numbers,” surely one of the epic papers in history.

Princeton likes to take some credit — in 2008, a PAW-convened panel of professors named him Princeton’s second-most influential alum, after only James Madison 1771 — but Turing actually wrote the paper at Cambridge. It dealt with mathematical problems similar to those on which Church was working independently. In fact, Church published a paper that took a different approach several months before Turing’s came out — but Turing’s paper contained a great novelty. As he lay in a meadow, he had a brainstorm. He proposed solving math problems with a hypothetical machine that runs an interminable strip of paper tape back and forth — writing and erasing the numbers zero and one on the paper and thereby undertaking calculations in binary form. The machine was to be controlled by coded instructions punched on the tape.

Previous machines throughout history were capable of performing only one assigned task; they were designed with some fixed and definite job in mind. By contrast, an operator could endlessly vary the functioning of a hypothetical “Turing machine” by punching in new coded instructions, instead of building an entirely new device. Here was a “universal computing machine” that would do anything it was programmed to do; curiously, the machine proper remained untouched. Thus Turing’s genius lay in formulating the distinction we would describe as hardware (the machine) versus software (the tape with binary digits).
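For readers who like to see the idea run, the hardware-versus-software distinction can be sketched in a few lines of Python. This is only an illustration, not Turing’s own formulation: the function name `run_turing_machine`, the rule-table format, and the two sample programs (`INCREMENT`, which adds one to a binary number, and `FLIP`, which swaps zeros and ones) are assumptions made for the example.

```python
from collections import defaultdict

BLANK = " "  # symbol found on every unwritten tape cell (an assumed convention)


def run_turing_machine(rules, tape, state="start", head=0, max_steps=10_000):
    """Run a rule table on a tape and return the non-blank part of the tape.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left), +1 (right) or 0 (stay put).
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # tape is unbounded both ways
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical "software" no. 1: add one to a binary number.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),    # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),    # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),
    ("carry", BLANK): ("1", 0, "halt"),    # number grew by one digit
}

# Hypothetical "software" no. 2: swap every 0 and 1.
FLIP = {
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", BLANK): (BLANK, 0, "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011"))  # same machine, first program
    print(run_turing_machine(FLIP, "1011"))       # same machine, second program
```

The function plays the part of the untouched machine; only the rule table handed to it changes from one task to the next.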

He never actually built such a machine — for Turing, it remained an intellectual construct — nor explained exactly how the tape would have worked. Nonetheless, his idea was profoundly important. Here begins, in theoretical principles at least, the digital universe as distinct from the old analog one. “The entire Internet,” Dyson writes, “can be viewed as a finite if ever-expanding tape shared by a growing population of Turing machines.” The idea led, a decade later, to the construction of an actual stored-program computer — but not until the paper tape of theory was replaced by lightning-fast electrical impulses.

Church praised his student’s paper and popularized the label “Turing machine.” But Turing kept a certain distance from his professors, with Church recalling years later that he “had the reputation of being a loner and rather odd,” even by rarified Fine Hall standards. When Turing presented “On Computable Numbers” in a lecture to the Math Club in December 1936, attendance was sparse, much to his disappointment. “One should have a reputation [already] if one hopes to be listened to,” Turing wrote to his mother glumly.

Little could this lonely and dejected Englishman have imagined that one day he would be considered among the most important graduates in the University’s history — so important has “On Computable Numbers” proven to us all.

Von Neumann later would make history by adapting Turing’s ideas to the construction of a physical computer, but the two men formed no particular friendship, despite the proximity of their offices. In personality they were virtually opposites. Von Neumann warmly embraced his adopted country, styling himself as “Johnny”; the reticent Turing never fit in and was shocked by coarse American manners, as when a laundry-van driver once draped his arm around him and started to chat. Von Neumann bought a new Cadillac every year, parking it splendidly in front of Palmer Lab; Turing had difficulty learning to drive a used Ford and nearly backed it into Lake Carnegie. The outgoing von Neumann always wore a business suit, initially to look older than his tender years; the morose Turing looked shambolic in a threadbare sports coat.

Despite their differences, von Neumann recognized Turing’s brilliance and tried to entice him to stay as his assistant for $1,500 a year after his second year of study was complete. But Turing’s eyes were on war clouds. “I hope Hitler will not have invaded England before I come back,” he wrote to a friend.

Already looking ahead to military code-breaking, Turing sought some practical experience with machines. A physicist friend loaned him his key to the graduate-student machine shop in Palmer Lab and taught him to use lathe, drill, and press. Here Turing built a small electric multiplier, its relays mounted on a breadboard — a foretaste of the complex machines he soon would use back home to crack Nazi codes.

In May 1938 he defended his Ph.D. dissertation, “Systems of Logic Based on Ordinals” — a paper unrelated to computers but still, according to one historian, “a profound work of first-rank importance” in advancing mathematical logic. Then he sailed home to England, which soon declared war on belligerent Germany.

Turing worked at top-secret Bletchley Park, where a team built 10 huge electronic digital computers, called Colossus. Turing did not design these, but he recognized them as signposts pointing to the digital future — though they had no stored program. Each Colossus inhaled paper tape at a stunning 30 miles an hour, processing 63 million characters in total before the collapse of the Third Reich.

Turing ought to have become a national hero for his ingenious code-breaking at Bletchley Park — historians say it helped to shorten the war by as much as two years — but the existence of Colossus remained a secret for decades.

War changed the future for von Neumann, too. Famed for his contributions to pure math, he now was transformed, paradoxically, into the most practical of applied scientists. Consulting for the U.S. Army Ordnance Department even before fighting began, he studied the complex behavior of blast waves produced by the detonation of high explosives. Eventually he was helping to build an atomic bomb.

Since no atom bomb ever had been attempted, scientists needed to model how one might work. This required innumerable calculations. At Los Alamos, roomfuls of clerks tapped on desk calculators and shuffled millions of IBM punch cards. After two weeks there, in spring 1944, von Neumann was dismayed by the slow progress. What was needed was computation at electronic speeds.

Such swiftness was promised by ENIAC (Electronic Numerical Integrator and Computer), a project to build an all-digital, all-electronic device to calculate shell trajectories for Army Ordnance at Penn. Von Neumann watched its progress with fascination but dreamed of something even more advanced: a true stored-program computer, a Turing machine.

To get ENIAC to change tasks, its handlers had to reset it manually by flipping switches and unplugging thousands of tangled cables. It could take days to rearrange its hardware for a problem that then took just minutes to compute. Inspired by Turing — whose “On Computable Numbers” he constantly recommended to colleagues — von Neumann began to conceptualize the design of a computer controlled by coded instructions stored internally.

To usher in the brave new world of Turing machines, von Neumann audaciously proposed that the Institute for Advanced Study build one itself, on its new campus beyond the Graduate College. He envisioned an “all-purpose, automatic, electronic computing machine” with stored programs: “I propose to store everything that has to be remembered by the machine, in these memory organs,” he wrote, including “the coded, logical instructions which define the problem and control the functioning of the machine.” This describes the modern computer exactly.
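What it means to keep the instructions in the same “memory organs” as the numbers can be suggested with a toy model in Python. It is a sketch under stated assumptions, not a reconstruction of von Neumann’s design: the function `run_stored_program`, the six-instruction set, and the sample program that adds 5 + 4 + 3 + 2 + 1 are all invented for illustration.

```python
def run_stored_program(memory, max_steps=1_000):
    """Execute instructions that live in the same list as the data.

    An instruction is a tuple (opcode, address); a data cell is a plain int.
    The opcodes (LOAD, ADD, SUB, STORE, JUMP_IF_POS, HALT) are hypothetical.
    """
    acc = 0  # single accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        op, addr = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "JUMP_IF_POS":
            if acc > 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {op}")
    raise RuntimeError("program did not halt within max_steps")


# Cells 0-7 hold the program; cells 8-10 hold the data it works on.
SUM_PROGRAM = [
    ("LOAD", 8),         # 0: acc = total
    ("ADD", 9),          # 1: acc += counter
    ("STORE", 8),        # 2: total = acc
    ("LOAD", 9),         # 3: acc = counter
    ("SUB", 10),         # 4: acc -= 1
    ("STORE", 9),        # 5: counter = acc
    ("JUMP_IF_POS", 0),  # 6: repeat while counter > 0
    ("HALT", 0),         # 7: stop
    0,                   # 8: total
    5,                   # 9: counter
    1,                   # 10: the constant one
]

if __name__ == "__main__":
    final_memory = run_stored_program(list(SUM_PROGRAM))
    print(final_memory[8])  # the running total left in cell 8
```

Giving the machine a new job means writing different contents into the list; nothing in `run_stored_program` is rewired, which is the contrast with ENIAC’s switches and cables.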

Von Neumann’s suggestion of building some kind of mechanical apparatus on the Institute grounds was greeted with dismay by many of the aloof intellectuals there, horrified by the thought of greasy mechanics with soldering guns. Nearby homeowners complained about potential noise and nuisance. But the Electronic Computer Project went ahead anyway, starting in November 1945 with ample funding from the military, plus additional contributions from the University and other sources. Young engineers were lured with a promise of free enrollment as Princeton Ph.D. students.

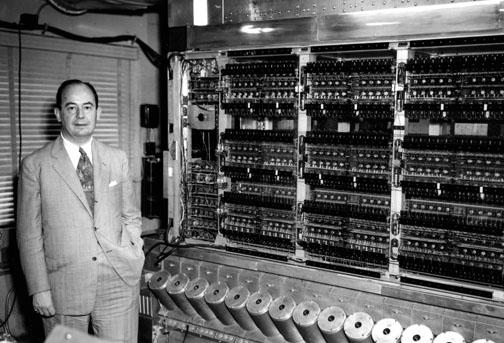

Called MANIAC, for Mathematical Analyzer, Numerical Integrator, and Computer, it was meant to improve in every way upon ENIAC. The Penn machine had 17,500 vacuum tubes, each prone to fizzling; the Institute’s, only 2,600. ENIAC was 100 feet long and weighed 30 tons; MANIAC was a single 6-foot-high, 8-foot-long unit weighing 1,000 pounds. Most crucially, MANIAC stored programs, something ENIAC’s creators had pondered but not attempted.

Assembly of the computer — from wartime surplus parts — began in the basement of Fuld Hall at the Institute; in early 1947, the project moved to a low, red-brick building nearby, paid for by the Atomic Energy Commission (the building now houses a day-care center). Not for six years would MANIAC be fully operational. The design choices von Neumann and his team made in the first few months reverberate to this day.

For example, they chose to use Turing’s binary system (0s and 1s) instead of a decimal system, and collaborator John Tukey *39, a Princeton professor, coined the term “bit.” So vast was their influence, the internal arrangement of today’s computers is termed the von Neumann architecture.

Von Neumann wanted MANIAC to jump-start a computer revolution, transforming science by solving old, impossible problems at electronic speeds. To maximize its impact upon the world, he eschewed any patent claims and published detailed reports about its progress. “Few technical documents,” Dyson writes, “have had as great an impact, in the long run.”

Seventeen stored-program computers across the planet soon were built following its specifications, including the identically named MANIAC at Los Alamos and the first commercially available IBM machine.

Controlled mysteriously from inside instead of outside, MANIAC seemed to many observers uncannily like an electronic brain. The great breakthrough was the set of 40 cylinders that surrounded its base like a litter of piglets. In an ingenious technical achievement, these cathode-ray tubes (similar to those coming into use for television) provided the world’s first substantial random-access memory.

One could lean over and literally watch the 1,024 bits of memory flickering on a phosphorescent screen on top of each tube, which Dyson calls the genesis of the whole digital universe. Such tubes had been perfected at Manchester University, England, where Turing was a consultant.

“The fundamental conception is owing to Turing,” von Neumann said of MANIAC. A decade earlier, the young Brit had proposed a tape crawling by with numbers on it; now MANIAC flashed at incredible speed the electronic equivalent of zeros and ones in glowing phosphor.

By our standards, MANIAC may seem a modest achievement: As Dyson notes, the computer’s entire storage (five kilobytes) equals less memory than is required by a single icon on your laptop today. No one yet had invented a modern programming language; just to do the equivalent of hitting the backspace key, science writer Ed Regis says, meant precisely coding in something like 1110101.
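The five-kilobyte figure follows directly from the numbers already given, as a short Python check shows. The tube count and bits per tube come from this article; the eight-bit byte and the 1,024-byte kilobyte are modern conventions assumed here, and the variable names are made up for the example.

```python
TUBES = 40             # cathode-ray memory tubes, per the article
BITS_PER_TUBE = 1_024  # bits flickering on each tube, per the article

total_bits = TUBES * BITS_PER_TUBE  # every bit the machine could hold
total_bytes = total_bits // 8       # assuming the modern 8-bit byte
total_kilobytes = total_bytes / 1_024

# The backspace-style code quoted by Ed Regis, read as a binary number.
sample_code = int("1110101", 2)

if __name__ == "__main__":
    print(total_bits, total_bytes, total_kilobytes)
    print(sample_code)
```

Run as written, the check gives 40,960 bits, or 5,120 bytes, which is exactly five kilobytes; the seven-digit code 1110101 is simply the number 117 written in binary.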

And the computer broke down frequently — all 40 memory tubes had to be working perfectly at once. “The sensitivity of the memory, that was a big problem,” recalls UCLA professor emeritus Gerald Estrin, who was hired by von Neumann in 1950 to design the input-output device, a paper-tape reader. “If there was a storm with lightning, you would feel it in loss of bits. We spent many nights on the floor trying to tune it up.”

One of the last survivors of the team, Estrin, now 90, still can recall the roar of the big air-conditioners that labored to keep MANIAC’s vacuum tubes cool, the clammy chill of the room that helped ensure, incidentally, that weary computer operators never dozed.

Von Neumann “was obviously very smart,” Estrin remembers. “The questions he asked. ... He was always calculating the results in his head and predicting your answers before you said them.” Once Estrin impressed even the master, however. “I got a call late at night. Von Neumann and others were there with the computer, and something wasn’t working. As I walked over from my home, I remembered I had flipped a switch and not put it back. So when I got there, I just went over and flipped the switch. They were flabbergasted. I looked like a genius!”

To put MANIAC to work, von Neumann sought projects that demanded electronic speed — problems that otherwise might have taken years to compute. For example, meteorology: to predict the weather across half the United States 24 hours in advance required 40,750,000 calculations, obviously impractical for a clerk at a desk calculator. On a public tour in 1952, a Daily Princetonian reporter marveled at how MANIAC could produce in 10 minutes a forecast that would have taken a person 192 days and nights of continuous labor. Von Neumann, it seemed, had outwitted the weather gods for the first time in human history.
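The scale of that advantage is easy to work out from the reporter’s two figures. The Python sketch below assumes, as the account implies, that the 192 days and the 10 minutes refer to the same forecast; the variable names are invented for the example.

```python
MINUTES_PER_DAY = 24 * 60

human_minutes = 192 * MINUTES_PER_DAY  # 192 days and nights of continuous labor
machine_minutes = 10                   # MANIAC's time for the same forecast

speedup = human_minutes / machine_minutes

if __name__ == "__main__":
    print(f"MANIAC was roughly {speedup:,.0f} times faster")
```

On those figures the machine finished the job about 27,648 times faster than a person could.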

MANIAC was a true “universal machine” in the Turing sense: It could do many tasks without any reconfiguration of its physical parts. Only the stored program need change. So it modeled the roiling gases in the interior of stars for University astronomers (see “The Stargazers,” PAW, Sept. 22, 2010), and for historians, calculated the position of planets in the sky back to 600 B.C.

But these clever investigations were far less urgent than its military tasks, which were kept so secret that Estrin and many others didn’t learn of them for decades. With the Soviet Union racing to build a hydrogen weapon, von Neumann was determined to use MANIAC to meet the threat. He advocated the development of a huge bomb that could be dropped on the Russians pre-emptively, if necessary.

In a single calculation that ran for 60 days and nights in 1951 (cloaked as “pure math”), MANIAC proved the feasibility of a hydrogen device. Months later, the first thermonuclear bomb, “Ivy Mike,” was detonated in the Pacific, producing a fireball three miles across — 30 times bigger than Hiroshima’s.

Meanwhile, in England, von Neumann’s former student was busy designing stored-program computers of his own, but that country could not compete with rapid U.S. developments. Von Neumann invited Turing to visit the Institute in January 1947, as MANIAC was being assembled. “The Princeton group seem to me to be much the most clearheaded and farsighted of these American organizations,” Turing wrote, “and I shall try to keep in touch with them.”

But Turing was fated to accomplish little more, owing to his arrest by British police for homosexual activities (“gross indecency”), which derailed his career. In lieu of prison, he was given shots of female hormones to reduce his libido. In 1954, at age 41, he died of cyanide poisoning, believed to be suicide. A half-eaten apple sat by his bedside. For gay activists, Turing is a martyr to homophobia, and they and others successfully pushed for an official apology by Prime Minister Gordon Brown in 2009.

Three years after Turing’s death, von Neumann died of bone cancer at 53. Princeton briefly took over the operation of MANIAC before donating it as an artifact to the Smithsonian about 1960. By then, there were 6,000 computers in the United States, nearly all using the von Neumann architecture, and the digital revolution was galloping ahead.

Neither of these two great pioneers lived to see the full explosion of the Turing machine: for example, how transistors and chips shrank computers so fast that, by 1969, the Apollo Guidance Computer could fit in a cramped spaceship, making possible a landing on the moon.

Today, personal computers alone number more than a billion worldwide — a far cry from the long-ago prediction, which Estrin remembers well, that 15 machines would suffice for the whole planet. Von Neumann Hall on the eastern edge of the Princeton campus honors the remarkable contributions of that vigorous, enthusiastic, and far-seeing man. And this year, as part of Turing Year celebrations, the former Princeton graduate student’s face will appear on a British postage stamp.

W. Barksdale Maynard ’88 is the author of Princeton: America’s Campus, an illustrated history of the University and its architecture, due in May from Penn State Press.