As a Ph.D. student in biochemistry who spent hours in the lab doing research, Angela Creager frequently used radioisotopes in her work, but didn’t think much about them. They were “part of the taken-for-granted repertoire of the lab,” she says. Creager ultimately decided to become a history professor specializing in scientific subjects. In Life Atomic: A History of Radioisotopes in Science and Medicine (The University of Chicago Press), Creager traces the numerous ways that radioisotopes have been critical to research and medicine.

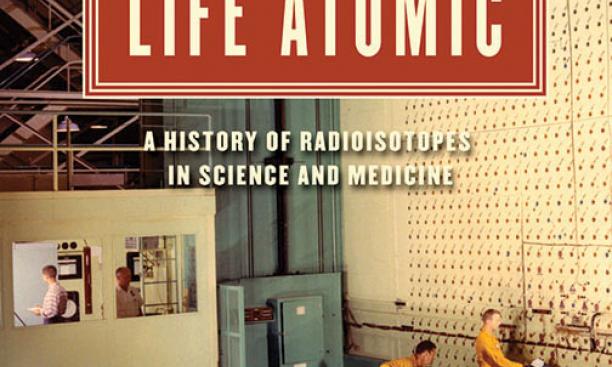

Radioisotopes, unstable versions of elements that emit radiation, have been a tool for biochemists since the 1930s, but they really gained a foothold after World War II, when the government’s nuclear reactors could produce them on a far greater scale than was possible in the lab. The government was also eager to promote their use. As Creager writes, the U.S. Atomic Energy Commission (AEC) saw radioisotopes in civilian research and medical practice as a way to de-link atomic energy from war in the mind of the public. The AEC set up a chemical-processing facility next to its reactor in Oak Ridge, Tenn., to produce and purify radioisotopes for lab use, and offered them at a subsidized price.

Radioisotopes changed research and medicine by making it possible to track biological processes. In the lab, they were used as tracers: The radiation they emit creates a signature “tag” that allowed scientists to follow the movements of molecules through processes such as photosynthesis and DNA replication. On the clinical front, they originally were envisioned as a silver bullet to cure cancer. That didn’t happen, though they now are used to treat some thyroid disorders. Instead, their contribution to nuclear medicine eventually came to include positron emission tomography (PET) scans, which are used to track cancer’s spread, and single-photon emission computed tomography (SPECT) scans, which can diagnose heart disease.

As the use of radioisotopes grew, so did concern about the hazards of radiation. “By the 1960s and 1970s, fears of radioactivity shifted from explosions — and the resulting acute burns — to long-term effects,” such as leukemia, from low levels of exposure, says Creager.

The modern understanding of radioisotopes’ potential hazards hasn’t eliminated their use. While they have largely been replaced in the research setting over the last decade or so (DNA sequencing, for example, is now done with fluorescent tags), it’s been much tougher to find substitutes in medical practice. A few years ago, there was a shortage of the most commonly used radioisotope in medicine after several reactors went offline at the same time. That forced delays in tests and a scramble for substitutes, demonstrating how much we still rely on an icon of peaceful atomic-energy use.