At 12, Tom Griffiths was slaying dragons, a task not so different, as it happens, from his current role as a professor of psychology and computer science at Princeton. That’s not to say his colleagues or, heaven forbid, his students behave like dragons. Rather, the role-playing games that engaged him in his youth examined human decision-making in much the same way his research does now.

The games from his childhood consisted of descriptions of settings and events. “You are in a dark cave,” a player would be told, and so on. A player might attempt an action, like climbing a rope to escape; the roll of a die would determine if it was successful. Early in his game-playing career, Griffiths started calculating the probabilities of sequences of events to decide on the right plan.

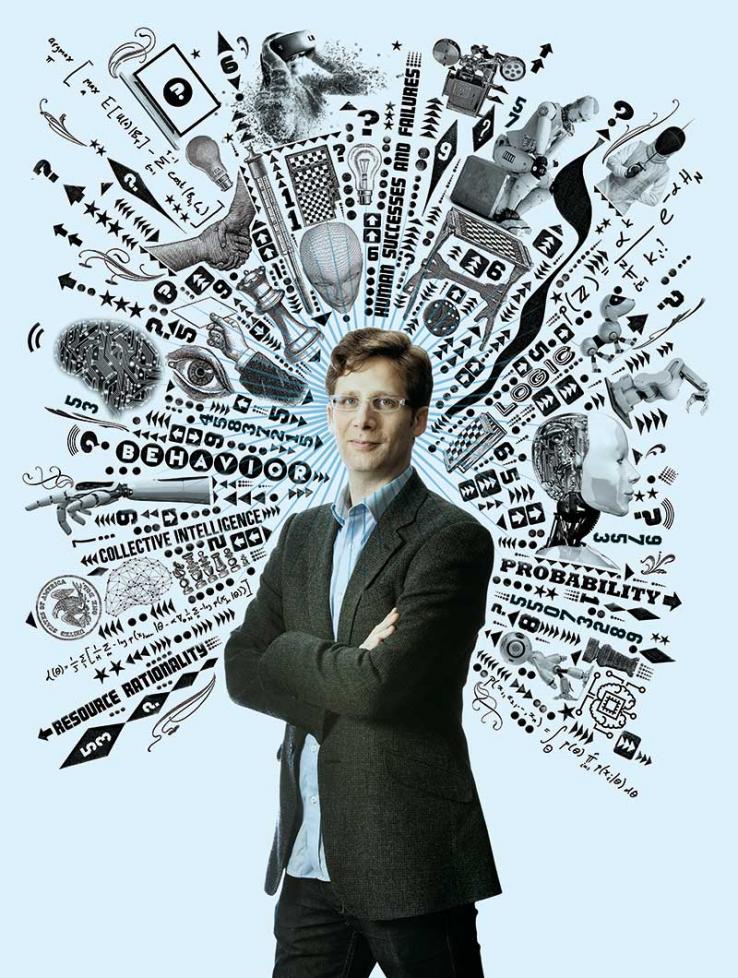

“One of the things that I find fascinating about those kinds of games is that what they’re trying to do is describe human lives in terms of a computational problem,” Griffiths says. “They’re trying to put a probability on every kind of human action or interaction. It’s exactly the thing that I do now, which is thinking about how to specify complex models for the kinds of events that happen in human lives.” Even if we don’t fight dragons or search for treasures, we fight illness and search for jobs. And we run rough simulations in our heads of how things might work out, one or many steps ahead. Griffiths aims to create, with computers, approximations of those mental processes, to better understand how and why people make decisions — and enable people to make better ones.

If you want to build a computer that can think like a person, psychological theories might be missing some important details. “In the past, theories have been much more qualitative, verbal,” says Jonathan Cohen, co-director of the Princeton Neuroscience Institute. “From one perspective, that’s appealing. It’s the kind of thing that’s much easier to explain to broad audiences. But they lack the precision that one expects of a rigorous scientific theory.”

Computer science provides the tools to study people’s psychology rigorously and in new ways — and Griffiths has been pushing the envelope, dramatically advancing the field of psychology, according to Cohen. Theories about cognition — learning, perceiving, deciding — are specified in computer code and produce quantitative predictions about how people will actually behave. “Increasingly, we have the opportunity to be much more precise about what we think are the mechanisms that underlie human cognition,” Cohen says.

Griffiths has long wanted to understand how people think. After all, he says, “if you want to make intelligent machines, human beings are still the standard that we have for defining intelligence.” Artificial intelligence (AI) — computers’ ability to mimic human intelligence and learn to play chess or drive a car, for example — still makes a lot of dumb mistakes. But it’s not just the quest for better AI that drives Griffiths — it’s his need to explore “one of the greatest scientific mysteries that we have, which is how human minds work.”

For years, economists treated human decision-making as highly logical. Then so-called behavioral economists found that we consistently make certain errors in our reasoning, suggesting that we might not be so rational after all. Griffiths has taken up a third flag, using mathematical models to quantify effort, efficiency, and accuracy in people’s thinking. In terms of deploying our cerebral effort efficiently, he argues, we’re actually highly rational.

Let’s say someone asks you to predict how much money a movie would make, without telling you anything about the film. You might consult your memory of various box-office numbers and estimate: a few million dollars. Now you’re told that the movie has already made $100 million. Ah, we’re in blockbuster territory. You update your estimate accordingly. With each estimate, you’ve performed a sophisticated statistical calculation.

Griffiths’ earliest work as a graduate student, at Stanford, drew on the work of the 18th-century statistician Thomas Bayes, which involves updating prior estimates about the probability of an event or explanation based on incoming information: If a movie has made $100 million, what are the chances that it will make $200 million, or that it stars Will Smith? Griffiths and his Ph.D. adviser, Joshua Tenenbaum, helped pioneer the use of Bayesian methods to model cognition. It turns out that we’re natural-born statisticians, at least for some problems: Bayesian probabilities often predict the kinds of inferences people actually make about the world. Asked about the movie grosses, for example, people provided off-the-cuff guesses that closely matched what a stats geek would have calculated based on historical distributions of movie earnings. Our experience bakes in those distributions, and we reason with them on the fly. Whenever you decide whether to keep waiting for a bus, you might not be scribbling Bayes’ equation on a notepad, but the result wouldn’t be much different.
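
For readers who want to see the arithmetic, here is a minimal sketch of that kind of update in Python. It is an illustration, not the researchers' actual model: the power-law prior over final grosses, its exponent of 1.5, and the assumption that we hear about a movie at a uniformly random point in its earnings (which gives the `1 / total` likelihood) are all choices made for this example, and the function name `predict_total` is hypothetical.

```python
def predict_total(observed, prior, candidates):
    """Return the posterior median of the final total, given the amount seen so far.

    observed   -- gross so far (here, in millions of dollars)
    prior      -- function mapping a candidate final total to an unnormalized prior weight
    candidates -- increasing list of candidate final totals
    """
    # Assumed likelihood: if we check in at a random point in the run, the chance
    # of seeing this particular amount is proportional to 1 / total, and it is
    # zero for any total smaller than what has already been earned.
    weights = [prior(total) / total if total >= observed else 0.0
               for total in candidates]
    half = sum(weights) / 2.0

    # Walk up the candidates until half of the posterior mass is covered.
    running = 0.0
    for total, weight in zip(candidates, weights):
        running += weight
        if running >= half:
            return total
    return candidates[-1]


# Assumed prior: most movies earn little, a few earn a lot (power law, exponent 1.5).
power_law_prior = lambda total: total ** -1.5

grid = list(range(1, 10001))  # candidate final grosses, $1M to $10B
estimate = predict_total(100, power_law_prior, grid)
print(f"Seen $100M so far; predicted final gross is about ${estimate}M")
```

Under these assumed numbers the prediction comes out at roughly one and a half times the amount already earned. A different prior, such as one for bus waiting times, would give a different rule of thumb, which is the point: the guess depends on the distribution that experience has baked in.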

What accounts for people doing such a good job? Griffiths attributes it to what he calls “resource rationality.” To understand what he means, I find myself sitting in front of a computer in the office of Fred Callaway, one of his Ph.D. students. I’m controlling a spider navigating a web on the computer screen. The web branches out from the center, and I must select the best path to the edge. Each step earns or costs me money (real money if I’m a real participant). I can spend a bit to click on any or all of the steps to reveal their costs or payoffs before I make my trek. The trick is not just to choose the highest-paying path, but to sample information efficiently, considering the real costs and potential benefits of each bit of information.

Callaway likens the problem to chess — and to planning more generally. If you think long enough, theoretically you could make the absolute best chess move, the most rational decision. But that could take a very long time. While we can’t always make perfectly rational decisions, we can at least be rational about how we make decisions: how we deploy our resources — our time and brainpower — when thinking. That’s resource rationality. Callaway defines it this way: “Given that I just have this little hunk of meat up here” — he gestures at his head — “what are the best moves for me to think about?” He and his collaborators are finding that participants are very good at deciding when to stop surveying the spider web and just set out, coming close to the decisions of a computer with many hours of processing time. Even if we do the wrong thing, we do it for the right reasons, given the resources we have.
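
The trade-off in the spider game can be sketched with a simple "value of information" calculation. The code below is a deliberately simplified, one-step version written for this article's example, not the lab's model: the function names, the payoff numbers, and the idea of comparing a single hidden step against one known alternative are all assumptions.

```python
def expected_value(outcomes):
    """Average payoff of a hidden step; outcomes is a list of (value, probability)."""
    return sum(probability * value for value, probability in outcomes)


def value_of_revealing(outcomes, best_alternative):
    """How much better we expect to do if we peek at the hidden step first."""
    # Without peeking, we commit to whichever option looks better on average.
    without_info = max(expected_value(outcomes), best_alternative)
    # With peeking, we can pick the better option after seeing the real payoff.
    with_info = sum(probability * max(value, best_alternative)
                    for value, probability in outcomes)
    return with_info - without_info


def should_reveal(outcomes, best_alternative, click_cost):
    """Peek only when the expected gain from peeking exceeds the price of a click."""
    return value_of_revealing(outcomes, best_alternative) > click_cost


# A hidden step that either costs $10 or pays $10, with equal probability.
hidden_step = [(-10, 0.5), (10, 0.5)]

print(should_reveal(hidden_step, best_alternative=0, click_cost=1))   # True
print(should_reveal(hidden_step, best_alternative=20, click_cost=1))  # False
```

In the first case the click is worth paying for, because what it reveals could change the choice. In the second, the alternative path is already better than anything the hidden step could offer, so even a cheap click is wasted thought.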

“Resource rationality helps to explain human successes and failures,” Griffiths says. “We’re good at predicting movie grosses because it’s a problem that can be solved using an efficient strategy — basically, remembering things we have heard about movies. But we make plenty of mistakes in other settings, where efficiency and accuracy may be at odds with one another.”

Griffiths studies not only how people make decisions as individuals, but also how they decide when embedded in a social network, and how groups as a whole behave. In the office of postdoc Bill Thompson, I play a computerized game that requires me to improve on an arrowhead design provided by another participant, then pass it to yet another participant. It’s an early test of new software designed by Griffiths and collaborators with funding from the federal Defense Advanced Research Projects Agency (DARPA).

The new platform allows researchers to recruit thousands of participants online and organize them into social networks so that the information passed between them — like the arrowhead design — can be controlled and observed. “It’s never been possible before to actually simulate complex cultural processes in the lab using real people,” Thompson says. The researchers are creating microcosms of the wider information ecosystem — the passage of news, gossip, advice, and insight between people in person or through social and mass media. They hope to study processes as complex as election interference or social-media silos and political polarization. If you can closely observe how people share small bits of information in a controlled environment, you might later be able to predict the spread of fake news on Facebook.

Another experiment explores collective intelligence. Participants must sort a series of cards in order in a short amount of time. Most people fail, but the next group of people gets to watch the best performer from the previous group, and so on. Eventually people discover better and better strategies; in the end, people do very well. Through this and similar experiments, the lab wants to see when populations as a whole can do things that are smart even when individuals can’t.
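
A toy simulation gives a feel for why watching the best performer helps. Everything in this sketch is assumed for illustration: the single "skill" number, the random tweaks, the group size, and the rule that each person keeps the demonstrated strategy unless their own variation turns out better. It is not a description of the lab's experiment or software.

```python
import random


def run_generations(group_size=10, generations=20, seed=0):
    """Track the best skill level demonstrated to each successive group."""
    rng = random.Random(seed)
    demonstrated = 0.0          # skill of the performer the first group watches
    history = [demonstrated]

    for _ in range(generations):
        group = []
        for _ in range(group_size):
            # Each person tries a random variation on what they watched...
            attempt = demonstrated + rng.gauss(0.0, 1.0)
            # ...and falls back on the demonstrated strategy if the tweak is worse.
            group.append(max(demonstrated, attempt))
        # The next group watches this group's best performer.
        demonstrated = max(group)
        history.append(demonstrated)

    return history


history = run_generations()
print(f"Best skill went from {history[0]:.1f} to {history[-1]:.1f} over {len(history) - 1} groups")
```

No individual in this toy world does anything clever; each one makes a single blind tweak. The improvement comes entirely from selecting and passing along the best result.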

“Our goal is to be able to simulate the processes of interaction between people that support group decision-making and cultural evolution,” Griffiths says. Cultural evolution describes the way ideas are shared and refined over time in a manner similar to the evolution of species. “One way of thinking about this is from the perspective of people having limited computational resources” (that is, finite brainpower). “If we want to achieve certain goals, such as developing new technologies or making scientific discoveries, we have to pool those computational resources and work together. The ability to run large-scale crowdsourced experiments where people interact with one another allows us to analyze the processes by which groups of people are able to act intelligently.”

Griffiths grew up in Western Australia and began programming at age 8, when his father brought home an early Epson portable computer. He commandeered it and wrote games in BASIC. At 13, he developed a chronic illness and spent two years doing his schoolwork from home. He also spent more time playing his role-playing games online, a couple of hours a day. Eventually he’d earned enough points to become what’s called a “wizard,” which allowed him to manipulate the world others inhabited, further honing his programming skills and foreshadowing a career in experimental psychology.

Just as Griffiths wielded imaginary swords in role-playing games, he also practiced with real ones; he has fenced since he was 12. “There are interesting computational problems involved in fencing,” he says. One must decide, for example, when it’s possible to parry to block another blade. Fencing has a strategic element, Griffiths says, “but in the moment, you are completely liberated from deliberation, a very extreme version of resource rationality.” As a student of the history of fencing, he has thought about the deconstruction of complex moves into easily teachable component parts, so that individual moves and the way they’re chained together are quick and easy to learn and execute. “I’m totally fascinated by this but stopped putting theory into practice after I messed up the math and a longsword broke my right wrist,” he says.

As an undergraduate at the University of Western Australia, Griffiths sought “genuine mysteries” and majored in psychology, with additional classes in philosophy, anthropology, and ancient history. The summer after his sophomore year, he encountered a book chapter on artificial neural networks, algorithms that roughly mimic the architecture of the brain in order to learn from data. “I was like, ‘Wait a minute. You can use math to make models of how human minds work?’” he recalls. “I got incredibly excited about that.” He brushed up on his calculus and linear algebra and joined a lab doing computational modeling research.

Applying to grad schools, he found, on Stanford’s web page, two scholars he hoped to work with. It turned out they had already retired, but Tenenbaum, about to start as a professor there, pulled Griffiths’ application from the pile. “We clicked when we first met,” Tenenbaum recalls. “The first word that I was going to use was just that it was a lot of fun, talking to him, really really fun. I was more senior, but it felt like talking to a peer or a colleague.” Griffiths gave Tenenbaum a handmade set of magnetic poetry with terminology from probability theory that they’d been scrawling on chalkboards, a set Tenenbaum still has. “It was both really sweet and really creative and something only he would have come up with or done.”

At Stanford, Griffiths shared several math classes with a fellow cognitive-science student named Tania Lombrozo. Here he makes a linear algebra joke: “We discovered one another’s singular values.” They married, and in 2006 both started teaching psychology at Berkeley, before coming in 2018 to Princeton, where Lombrozo is also a psychology professor.

Griffiths says that studying both psychology and computer science allows him to “go to a talk in psychology and come away from it wondering, ‘Why is it that people do that thing?’” and then hear about a technical idea at a computer science talk that can answer the question. “So what I do,” he says, “is spend a lot of time just collecting mysteries on the one hand and solutions on the other hand, then hopefully connecting those things up.”

“I think the thing that’s most striking about Tom is he’s got these very strong mathematical chops, but he’s also sort of a humanist,” says collaborator Alison Gopnik, at Berkeley. “He’s interested in theories of human thinking, but also in how they can help us think in our everyday lives.”

I played one version of the spider game in Callaway’s office that provided feedback on whether I was making the right move or collecting the right information. Such tools could give people insight into whether they waste too much time exploring options, or whether they act too impulsively and fail to consider long-term benefits. “Just telling me that about myself,” Callaway says, “that’s giving me a tool that I can use for self-reflecting.” Another project explores “cognitive prosthetics,” software “assistant” tools (remember Clippy, Microsoft’s animated paper-clip assistant?) that nudge people toward the best decisions. A system designed by Griffiths’ lab turns to-do lists into a kind of game by awarding certain numbers of points for tasks, helping people reduce procrastination and prioritize the most important undertakings. In some ways it knows us, and what will make us happy down the road, better than we do in the moment.

In her second year as a Ph.D. student under Griffiths, Anna Rafferty, now a computer scientist at Carleton College, proposed shifting her focus from language to education. Griffiths “was really encouraging,” she recalls. “He got that one of the things driving me was that I wanted to do something that would have a practical positive impact on society, besides doing cool research.” Together they’ve explored the best way to sequence lessons and tests, and how to infer the thinking that leads to a student’s mistakes. Rafferty and Griffiths have launched a free website, Emmy’s Workshop, offering a personalized software tutor for learning algebra.

Some of Griffiths’ insights about computer science and thinking are included in his 2016 book, Algorithms to Live By, written with the journalist Brian Christian. The book describes classical computer science methods — specifically how programmers get machines with limited speed and capacity to find good-enough solutions to ginormous problems — and what they might teach the rest of us, who also have limited speed and capacity.

Readers might be relieved to find that living up to computers often involves thinking less, not more. For instance, we sometimes overestimate how much we should explore our options before acting. The book also discourages readers from attempting to multitask. When computers tackle too much at once, they load one task’s data, but before they have a chance to do anything with it they have to replace it with another task’s data, leading to a wheel-spinning phenomenon called thrashing. It’s basically what we do when we return attention to a project and have to remind ourselves where we were. “That really helps me understand why multitasking is problematic in a way that I never fully understood before,” Callaway says.
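
Thrashing is easy to reproduce in a few lines. The sketch below assumes a memory that holds a fixed number of tasks and evicts the least recently used one when it runs out of room; that eviction rule, the function names, and the task counts are choices made for this illustration rather than anything specified in the book.

```python
from collections import OrderedDict


def count_loads(accesses, capacity):
    """Count how often a task's data must be loaded into a memory of limited size."""
    memory = OrderedDict()  # tasks currently in memory, least recently used first
    loads = 0
    for task in accesses:
        if task in memory:
            # Already loaded: just mark it as the most recently used.
            memory.move_to_end(task)
        else:
            # Not loaded: pay the cost of fetching it, evicting the stalest task if full.
            loads += 1
            if len(memory) >= capacity:
                memory.popitem(last=False)
            memory[task] = True
    return loads


def round_robin(num_tasks, rounds):
    """Switch between tasks in a fixed rotation: 0, 1, 2, ..., 0, 1, 2, ..."""
    return [task for _ in range(rounds) for task in range(num_tasks)]


juggling = round_robin(num_tasks=4, rounds=5)      # 20 task switches in total
print(count_loads(juggling, capacity=4))  # room for everything: 4 loads
print(count_loads(juggling, capacity=3))  # one task too many: 20 loads
```

With room for all four tasks, each is loaded once and then stays put. Take away a single slot and every switch forces a reload, so all of the effort goes into shuffling data and none into the work itself.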

And the book addresses creativity. Instead of knocking your head against the wall trying to improve on an idea, add some randomness. Just as algorithms sometimes get stuck in dead ends and need resets, a writing project might benefit from your taking a break and clicking the “random article” link on Wikipedia. In the social realm, the authors discuss game theory, or strategies for competition, cooperation, and coordination. Just as algorithms that trade stocks or bid in online auctions can go haywire, so can human markets in which prices are based on what everyone thinks everyone else thinks something is worth. The authors advise, “Seek out games where honesty is the dominant strategy. Then just be yourself.” (Griffiths says “games” refers to any interaction — a friendship, say.)
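
The value of a random reset can be shown with a small hill-climbing example. This is a generic sketch of the idea rather than code from the book: the made-up "landscape" of idea quality, the function names, and the number of restarts are all assumptions.

```python
import random


def hill_climb(landscape, start):
    """Keep stepping to the better neighbor until no neighbor is an improvement."""
    position = start
    while True:
        neighbors = [i for i in (position - 1, position + 1)
                     if 0 <= i < len(landscape)]
        best = max(neighbors, key=lambda i: landscape[i])
        if landscape[best] <= landscape[position]:
            return position  # stuck: every small change makes things worse
        position = best


def climb_with_restarts(landscape, restarts, rng):
    """Climb from several random starting points and keep the best peak found."""
    peaks = [hill_climb(landscape, rng.randrange(len(landscape)))
             for _ in range(restarts)]
    return max(peaks, key=lambda i: landscape[i])


# Quality of nine neighboring ideas; the best one (9) sits behind a dip.
landscape = [1, 3, 2, 5, 9, 4, 0, 7, 6]

stuck_at = hill_climb(landscape, start=0)
best_found = climb_with_restarts(landscape, restarts=50, rng=random.Random(0))
print(f"Steady improvement from the first idea stalls at quality {landscape[stuck_at]}")
print(f"With random restarts, the best quality found is {landscape[best_found]}")
```

Polishing the first idea gets stuck on a modest peak because every small change looks like a step backward. Starting over from random places gives some of the climbs a chance to begin on the slope of a taller one.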

The advice to solve some problems by relaxing certain constraints has social implications, as well: If you’re planning wedding tables, for example, you might find some surprisingly good arrangements by not assuming certain parties need to sit together. One engineer Griffiths spoke with had a breakthrough when she removed her parents from the head table.

Griffiths says readers have shown appreciation for the advice in his book: “I have received grateful emails from people who now have a better handle on their schedules, feel more relaxed about leaving things a little messy, and are thinking about using algorithms for wedding planning.” Studying humans to build AI to help humans: It can do more than teach us algebra. It might avoid wedding drama, too.

Matthew Hutson is a freelance writer who specializes in science.