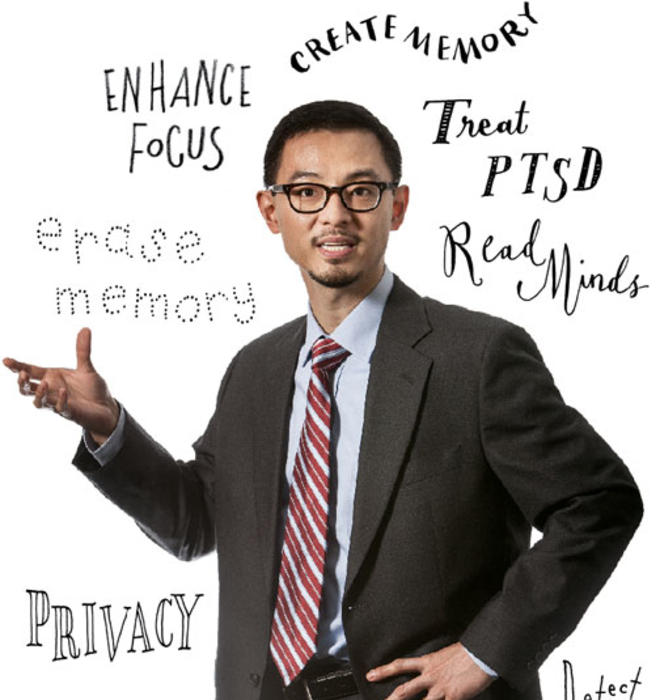

AS SCIENTISTS at Princeton and elsewhere make strides in understanding the brain, Matthew Liao ’94 works to understand where they may lead us. Liao, director of the master’s program in bioethics at New York University, is a philosopher and neuroethicist. Scholars of neuroethics, a new discipline, focus largely on two areas: what happens in our brains when we make ethical decisions, and the ethics of neuroscience technologies. PAW spoke with Liao about that second aspect of his work.

Why neuroethics?

I got interested in this area because a couple of my colleagues were taking things that enhance your cognition — drugs like Ritalin and modafinil. There was a survey in Nature that said something like one in five Nature readers were on cognitive enhancement. And we’re not talking about coffee. Modafinil — it’s a drug that the U.S. government has been interested in. It basically allows you to go for a long period without sleep. The government wants pilots to be able to go on missions for, like, 18 hours without feeling tired. The drug is used for narcolepsy, but they’ve found that it can also help normal subjects function really well without sleep.

There are also mood enhancers — things that make you feel better, like Prozac. There are people taking an antidepressant for depression. But some people take it to make themselves feel happier — better than well — even if they are not clinically depressed. And so that raises issues about authenticity: Do we change ourselves, or our perception of ourselves, when we take drugs like that? We’re altering our personality — what does that mean? And then it gets into issues about our perceptions of the world — does it affect the way you see the truth, the way you perceive things? Maybe you’re sad because things are really bad! But this will make you think, no, everything is fine! And then you don’t have to do anything about it. It can affect your responses.

What are some of the other issues you’re looking at?

One of the things I wrote about is memory modification. A lot of soldiers are coming back from war with post-traumatic stress disorder, PTSD. There’s a drug called propranolol; it’s a beta-blocker that they give to soldiers with PTSD to dampen the emotional memory, so that the memory doesn’t get consolidated as strongly into long-term memory. It works to block the process of consolidation, when the memory moves from short-term to long-term memory.

There’s another drug, ZIP, which stands for zeta inhibitory peptide, which affects reconsolidation of memory. It can erase particular memories. Reconsolidation means that every time we try to recall something, certain proteins have to come together, and they put together that memory again. It’s not like a computer memory, where once you have it, it’s right there and you can access it each time. Reconsolidation puts a memory back together and updates it, based on your current way of thinking. So in that way, the memory is always changing.

Is that how you can make yourself believe things that didn’t happen in a certain way?

If you keep thinking about it, you can change the memory, and it will evolve. You’ll remember the big things, or misremember the big things, and then leave out the details.

And that’s how you forget the pain of childbirth, right?

That’s right! You remember all the great things, and you forget the pain. That’s exactly what happens.

How does it work?

The theory is that the protein kinase M zeta, which is called PKMzeta, is often needed for the storing of long-term memories. But when ZIP is used, it inhibits PKMzeta and blocks the memory from being reconsolidated. And if you do that, the memory stops existing completely. There was an experiment with mice where scientists first taught mice a memory — that they would be shocked each time they went into a certain zone. Some mice would be given ZIP to inhibit the PKMzeta. There were also control mice that did not get ZIP. A day later, the control mice would avoid the shock zone. But the mice given the ZIP would keep going back and getting shocked.

You can target a particular memory and erase it. The scientists also teach the mice a maze, in addition to the shock zone. They try to trigger the memory of the shock zone to see if they can erase it. And then they see if the mice also forgot the maze. If all the mice can run the maze, you can infer that the drug hasn’t erased the maze memory. What you don’t want is a drug that erases everything.

The theory is that because the drug targets reconsolidation, it only targets memory that you’re trying to recall. So one danger is: Suppose you’re trying to recall another memory while you’re recalling this memory. You might forget that one, too.

How might this be used in humans?

Well, one application is addiction. The old theory of addiction is that once people get addicted, there are certain triggers, so you try to get addicts to avoid the triggers. With this, the idea is to try to recall the triggers. You might purposefully try to trigger the triggers. And then at that point, erase the trigger. That set of memories gets erased.

You could use it to erase memory of a very traumatic event. And some people may also think about using the technology on the enhancement side. DARPA [the military’s Defense Advanced Research Projects Agency] is really interested in repairing memories and restoring memories. But once you can repair and restore memories, you should be able to create memories.

But they’re not real memories!

Exactly. You can create fake, you know, pseudo memories. And it’s very easy, actually, to create fake memories. There’s a law professor, Elizabeth Loftus, who did pioneering work on inserting false memories into people. She told people they were participating in an advertising study for Disneyland, and showed them an ad with Bugs Bunny and a cardboard cutout of Bugs Bunny. Then she asked whether people had ever met Bugs Bunny in Disneyland, and said, “Tell me about your experience with Bugs Bunny.” And some people said, “I don’t remember that.” But about 20 percent said things like, “Oh, yeah, Bugs Bunny touched my ear and gave me a big hug” — they tell this really elaborate story. But of course that never happened. Bugs Bunny is owned by Warner Bros., not by Disney. He would never have been in Disneyland.

So is it a good thing or a bad thing to pursue?

I ask two questions. One is, how far are we in developing this technology? Usually the technology hasn’t been perfected, but we’re getting there. And the second question is the ethical question: Let’s suppose we could develop it — should we be doing that? The question there becomes more complex, because it depends on the situation. You can imagine that some people have had really traumatic experiences and feel they can’t live anymore, like a soldier with PTSD. If the alternative is that you want to kill yourself, then maybe it’s OK to take a drug, even if it creates false memory.

But in other cases, we have to worry: What would happen if it gets into the wrong hands? One of my jobs is to think ahead and try to map out the ethical arguments: Should we be using these technologies, and under what circumstances?

It seems that once you start going down that road, it would be hard to pull back.

Totally. Once you let the genie out of the bottle, everyone will want to use it. And some people will misuse it.

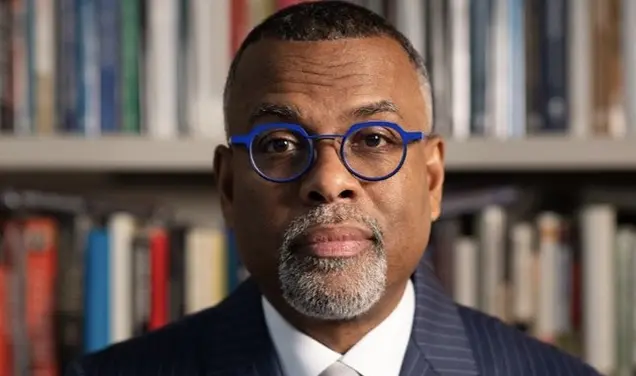

Ethicists are talking about these things, and scientists are in the lab. Do the scientists talk to the ethicists?

We try to talk a lot about it. [Here, Liao noted that he was planning a conference with ethicists and scientists about “borderline consciousness” — using fMRI to determine if patients in a “persistent vegetative state,” or PVS, actually are conscious. He referred to a 2010 study in which scientists concluded that some patients could “answer” yes-no questions because a certain region of the brain lit up.] So that raises the question: Is this patient in PVS conscious? Clinically, by our current definition, these people are completely unresponsive; they don’t have consciousness. They are brain-dead. But by fMRI — and this is disputed territory — they appear to have consciousness.

What does it mean for a family when you find out that your loved one could appear to be able to answer questions? That might affect your decision about end of life. It’s going to affect public policy.

This sounds like mind reading.

It is mind reading.

Do we really want other people to know what we’re thinking?

There are labs where they show subjects clips of movies, and then, on the basis of what’s firing in your cerebral cortex, the scientist can kind of reproduce it. The scientist tries to re-create what the subjects are seeing. And you should see how close it is. If the subject sees a bird, the scientist has a kind of fuzzy picture of a bird. With statistical significance, they can figure out what the subject is thinking, which words they’re thinking about. But the technology is not perfected yet.

They can’t just figure out what you’re thinking without having a lot of data. For example, they get 50 people to look at a cup, and see what happens in the brain when the people see the cup. Then, when you are under a scanner and what’s happening in your brain matches what happens in the other 50 images, they know you are looking at a cup.

Right now we just don’t have the data for many things. You would have to get a bunch of people to look at a lot of things to say you’re reading people’s minds.

Will that always be the case?

Not always. But that’s a big limitation right now.

The other thing that fMRI might be used for is crime detection. That raises questions about whether you’re testifying against yourself, for example — you’re not talking, you’re not testifying, but they could use that image. ... They actually prosecuted a woman in India by using the fMRI. She was accused of lacing her boyfriend’s coffee with arsenic. She was convicted.

There was a case in New Jersey where they tried to get fMRI images to be admitted as evidence. The judge very sensibly said, “We’re not there yet.”

Suppose we could do it — what are the issues?

One worry is privacy. It’s bad enough governments can read our phones; now they could be able to read our minds. Some people are suggesting that maybe we can have mind scanners in airports, so that we scan brain waves to see which people are thinking about certain words: bomb bomb bomb bomb. But think of this hypothetical: The government sometimes engages in torture to extract information. Imagine if you can extract information without torturing the subject. Just put the subject in an fMRI. Wouldn’t that be better?

Do we have to do either one?

Well, if we had to do one, it seems like the preferable way — you violate the person’s privacy, but at least you don’t torture the person to death.

Did you see the movie Minority Report?

Yes, with the “precogs” [people who foresee crimes before they are committed]. There is a whole literature in neuroethics about whether there really is free will. Scientists have done several experiments. They get you to look at a clock. And as the clock moves, they tell you to flick your wrist. And before you even do it, they can tell when you’re going to do it. They do it on the basis of what’s called the readiness potential — they know you’re going to make a decision a couple of seconds before you’re even aware of your decision.

In another experiment they got subjects to press the left button or the right button. Up to seven seconds before the subjects picked a button, they could figure out whether the subject would pick right or left, depending on the brain signature. So that’s getting very close to Minority Report. That raises questions about free will.

How do the scientists feel about the philosophers?

Scientists I know are so into their research, it’s hard to say, “I’m not going to pursue this because the disadvantages outweigh the advantages.” They sometimes think we’re pests. The initial reaction is that as long as they have gotten it past their institutional review board, they say, we’ve done our ethics. But I think after talking to us, they realize the importance of it. Because science sometimes goes bad. When it turns out that the science has not been done in an ethical way, it actually sets the science back by 10 to 15 years.

There’s a view that technology is inherently neutral. You can use it for good; you can use it for bad. Nuclear energy can be used for good and for bad. If we don’t use that technology, we’ve shut ourselves off from a lot of good things. Or, take this knife. Some people say, “Great — we can cut apples”; other people say, “Let’s stab people.” That issue has been with us ever since we started inventing tools. What neuroethicists are trying to do is avoid doing the bad thing first.

How about DARPA? Talk a little about where DARPA’s neuroscience technology is today.

Deep-brain stimulation exists. That’s where electrodes are implanted in the brain, and the electrodes produce electrical impulses. About 100,000 people today use it for Parkinson’s.

DARPA is very insistent that they only want these technologies for treatment, to treat people and repair the memories of soldiers with PTSD. But it goes back to my point about dual use: Once you have the technology, you don’t have to use it only for treatment. You can actually use it for enhancement for soldiers. And then it can be used for the enhancement of others. It just seems so tempting ...

Take drugs like Ritalin, which is supposed to be for people with attention-deficit disorder. But kids without ADD use it in high school.

What’s your biggest worry?

I think privacy is one big worry. Suppose you’re on the job, and we know how to erase your memory. Suppose you work for a company with proprietary technology, and they want to make sure you don’t give it away. This is a bit science fiction, but it’s worth asking: Can they, each day, erase whatever you’ve learned, so you can’t divulge secrets?

How far away are we from some of these technologies being part of our lives?

Well, some of them are here already. I think about 10 to 15 years. The deep-brain stimulation is already available. We just need to make a closed-loop system — a system that monitors brain activity itself, just like we have thermostats that monitor room temperature. An implant could show that activity is spiking when something important is taking place in your brain, and someone can figure out what you’re thinking.

The biggest challenge is they have to give you an implant. But, you know, a lot of people are already using these “wearables” to play video games — things that send electrical pulses to your brain to help you play better. I know people who wear them while they’re studying — to give them cognitive enhancement by sending small electrical pulses.

So here are people voluntarily giving Google all kinds of information, voluntarily taking Ritalin, putting wearables on their heads, and giving their 10-year-olds drugs. It’s pretty easy to imagine that people could be convinced to do these things. You tell them that you’ll be better at games; you’ll be better at school. Instead of taking 10 hours to learn math, it will take you two. People will say, “Sign me up!” There can be a pay service and a free service. For the free service, you agree to give us access to your brain, just like you provide access to your email for advertisements. And once they have the information about you, they can figure out things about other people. So even if you didn’t sign up but everybody else did, they can figure you out.

That’s a little unsettling.

It is. But that’s how these technologies will be rolled out and adopted.

Interview conducted and condensed by Marilyn H. Marks *86
