PAWcast: David Robinson ’04 Examines Ethics in Algorithms

‘What kind of a decision are we making here? And what are the human impacts of what we’re doing?’

What happens when a donor kidney becomes available to somebody who needs one? In the U.S., a hundred thousand people are waiting on lists, all with different ages, complications, and circumstances. How do you decide who gets it? In his new book, Voices in the Code, David Robinson ’04, a scholar and co-founder of the equity-focused NGO Upturn, takes a look at the algorithm used to match kidneys and patients, and on the latest PAWcast he discusses how it was developed. Algorithms are increasingly used for all kinds of decisions in public life, he says, making close examination of the ethics and morality within them ever more crucial.

Listen on Apple Podcasts • Google Podcasts • Spotify • Soundcloud

TRANSCRIPT:

Brett Tomlinson: Computers play an enormous role in our lives in ways that we might overlook. Depending on where you live or work, an algorithm could be helping to decide whether your children will get a coveted spot in a top public school, whether a bank will approve your loan to buy a home, or whether you’ll get an interview for the job you’re seeking. This month on the PAWcast, we talked with David Robinson ’04, whose new book, Voices in the Code, examines how some of these high-stakes algorithms are created and makes recommendations for how we can improve that process.

David, thanks for joining me.

David Robinson: Glad to be with you.

BT: I’d like to start with a very basic question, because our listeners may have varying levels of tech literacy. What is an algorithm? How do you define it?

DR: That’s a really great question, actually, Brett, and it’s one that a lot of other people have, including policymakers. I would say the most useful way of thinking about it is that an algorithm is a set of rules for solving a problem. So you could think of the long division that you or I would’ve done by hand in grade school. Technically that’s an algorithm, but usually people only roll out the fancy word when they’re thinking about software, about rules that run on a computer. So what I say in the book is, sure, there’s a technical meaning, and it could cover all kinds of rules. But when we’re having these conversations about new technology, especially if we want them to be inclusive, I think it’s important to meet people where they are with our language. So the way I use the word in the book is the way I think most news and public sources would use it: rules implemented in software.

BT: And in a sense, many of the decisions being made by algorithms today have been made for years and years by administrators following similar sets of rules. An algorithm may have some benefits: speed of processing, and potentially removing human error and maybe some biases, though I suppose it can introduce biases as well. But as you point out, making an algorithm is not a purely technical exercise; there are values and morals to be considered.

DR: Sure. So you could think of an algorithm as a machine that makes decisions. Part of making the machine involves technical stuff about how machines work: where does the data come from, how is it processed, how quickly is it all happening, and so on. But what you also have is, OK, what kind of a decision are we making here? And what are the human impacts of what we’re doing? A moment ago you used the example of students signing up for schools. That’s a beautiful illustration of this. Yes, there’s a technical piece of matching students to schools; for example, you don’t want to assign more students to a school than you have seats in the classroom. But there’s also the question of who gets their first choice of school. Who ought to get their first choice? What kinds of students should be put together in the same school? Do we think about it individually, one student at a time, just giving the best option to each applicant? Or are we trying to think about the whole cohort and build a diverse class? Because that would be a very different set of rules, right?

In one case, you could decide one person at a time, but in the other you would have to look at the whole school together. As I understand it, that’s how Princeton and other places explain what happens: you’re looking at a whole community that you’re building. And that’s kind of an ethical decision one way or the other. Not kind of: it is an ethical decision. And yet whoever writes the software is going to be implementing whichever ethical way of thinking about it actually happens. Maybe someone tells them how to navigate those ethics, or maybe nobody does, in which case they’re going to have to figure it out.

BT: A big part of what comes through in your book is the role of stakeholders. The examples you give are high-stakes algorithms, and different communities have different views of how they should work. Why is it so important to incorporate stakeholders early in the process of creating these algorithms?

DR: Well, I think it’s important for all the same reasons that we think democracy is important in general: we think people should have a voice in the rules that we all live under. Basically, anybody who has to live under some set of rules ought to have some voice in setting up what those rules are.

And maybe there are exceptions, for children, or people who are temporarily part of a community, or people who have profound disabilities of various kinds. But in general, everyone ought to have a voice; that’s how we usually think about it. And you might say, “Look, if government uses software, then everyone had a voice, because we all elected our leaders, after all.” If they decide that some piece of software should decide who goes to jail, and we don’t like what they do, we should just elect different leaders using our existing democratic process. So a specific way of doing democracy for algorithms might seem unnecessary.

That’s a fine intuition to start with. But as we look at what actually happens when software gets used to make some of these ethically charged decisions, we’re seeing that our existing, old-style democratic approaches, like electing our leaders, don’t always get us all the way to where we would want to go in terms of having the software reflect shared values.

So, I used to work a lot on criminal justice issues, and in courtrooms, sometimes software gets brought in that’s going to judge people, and the court officials and the attorneys may not fully understand the ethical choices embedded in that software, as in principle they should. Or take schools: there’s been a huge controversy in New York City about how public high school seats are assigned. And recently, the prior mayor, Bill de Blasio, directed that the algorithms for assigning students to schools should finally be made public, which they hadn’t been before. So there really is a frontier here: figuring out how, in practice, we do democracy when it comes to these new technologies.

BT: I suppose there are lots of examples of these things going wrong or having unintended consequences, but in your book, you focus on one high-stakes example that, in general, I think you would say has gone right: the organ transplant system, specifically the allocation of kidneys. And I have to confess, before reading the book, I had never given much thought to how complicated that really is, logistically and ethically. Can you give an overview of some of the competing viewpoints or approaches in allocating kidneys?

DR: Sure. Before we even get to the different opinions, let’s do the mechanics for a second: how does this thing actually end up saving people’s lives? If you think about transplants, people often talk about a waiting list. But it’s actually not a list in the sense of a first-come, first-served, take-a-number kind of thing. It’s a matching process, because a lot of different factors play into it.

Say a donated kidney becomes available. Someone is an organ donor, and unfortunately they’re dying, but they want to save someone else, and they didn’t pick a particular person to save. So we’ve got to figure out where this organ is going to go, and a lot of different factors matter. There are issues of having the right blood type and a compatible immune system to benefit from an organ. There are questions about who’s nearby, and even the logistics of moving an organ, or a patient, from one place to another.

Once the organ becomes available, what this software does is look at every potential recipient and produce a prioritized, rank-ordered list of patients to offer this particular organ to. Then they work their way down the list until someone says, yes, I’ll take that organ. And actually, these offers go out not to the patients themselves but to their medical teams. So there’s definitely a level of human judgment between the algorithm and actually getting into the operating room. But there’s also a central piece that’s automatic, where, depending on how the algorithm works, we’re going to get different outcomes about who gets this lifesaving treatment.
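The offer process described here can be sketched in a few lines of Python. This is a hypothetical toy, not the software discussed in the interview: the `Patient` fields, the single numeric `score`, and the function names are assumptions, and real allocation weighs many more factors. The ABO table is the standard simplified blood-group compatibility rule, and the `team_accepts` callback stands in for the medical team’s human judgment.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Simplified ABO compatibility: donor blood type -> recipient types that can receive it.
ABO_COMPATIBLE = {
    "O": {"O", "A", "B", "AB"},
    "A": {"A", "AB"},
    "B": {"B", "AB"},
    "AB": {"AB"},
}


@dataclass
class Patient:
    name: str
    blood_type: str
    score: float  # hypothetical allocation score; higher means higher priority


def ranked_offer_list(donor_blood_type: str, patients: List[Patient]) -> List[Patient]:
    """Keep only compatible patients and rank them by score, highest first."""
    compatible = [p for p in patients if p.blood_type in ABO_COMPATIBLE[donor_blood_type]]
    return sorted(compatible, key=lambda p: p.score, reverse=True)


def place_organ(
    donor_blood_type: str,
    patients: List[Patient],
    team_accepts: Callable[[Patient], bool],
) -> Optional[Patient]:
    """Offer the organ down the ranked list until a medical team says yes."""
    for patient in ranked_offer_list(donor_blood_type, patients):
        if team_accepts(patient):  # the human-judgment step between algorithm and surgery
            return patient
    return None  # nobody on the list accepted


waiting = [
    Patient("Ana", "A", 7.5),
    Patient("Ben", "O", 9.0),  # type O cannot receive a type A kidney
    Patient("Cy", "AB", 8.2),
]
# Suppose Cy's team declines this particular offer.
chosen = place_organ("A", waiting, team_accepts=lambda p: p.name != "Cy")
print(chosen.name if chosen else "no match")  # prints "Ana"
```

Because the ranking is recomputed for each organ, changing how `score` is defined changes who is offered the organ first, which is where the ethical choices enter.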

So there are the medical and the logistical factors, but there’s also a moral piece. We have one organ, and there are approximately a hundred thousand people waiting for kidneys in the United States. We could give the organ to whoever would benefit the most, in terms of how long it would extend their life. If we did that every time, we would maximize the total number of life-years gained from transplant. That was one idea about how to rewrite the kidney algorithm. My book focuses on the debate from about 2004 to 2014, when they were rewriting the system, and one school of thought was that we should fully maximize the benefit from this scarce supply of organs.

But the other side pointed out that this wouldn’t be very fair, for a number of reasons. For one thing, it would very strongly favor younger recipients. If you were older and came to need a kidney, you might be locked out, because your life couldn’t be extended as much. There were also racial equity problems. It would be inequitable insofar as the burden of kidney disease is concentrated in communities of color, among African American and other non-white American patients, partly because of the social determinants of health. Things that tend to lead to needing a kidney include diabetes, high blood pressure, and poor access to prior medical care, and through no fault of their own, people in certain communities are much more likely to face them than people in others. So we would basically be punishing people for having lacked access to care in the past if we said we’re going to maximize the total benefit from each organ, one organ at a time.

So what happened in the end was a compromise in which pure maximization of total benefit was rejected as unfair. But what they said was, well, we can give the youngest and healthiest of all the organs to the youngest and healthiest of all the recipients, and we can do that without giving organs only to young and healthy people. It’s remapping which organs go where.
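The compromise described here, sometimes called longevity matching, can be illustrated with a toy rule. This sketch is hypothetical: the percentile scores for organ quality and for a candidate’s expected post-transplant survival, the 20 percent cutoff, and ordering by time waited are all assumptions chosen for illustration, not the actual rules of the U.S. system. The point it shows is that the best organs are steered toward the longest-lived recipients while every other organ stays open to everyone.

```python
from dataclasses import dataclass
from typing import List

TOP_FRACTION = 0.20  # illustrative cutoff, an assumption for this sketch


@dataclass
class Candidate:
    name: str
    survival_percentile: float  # hypothetical: 0.0 = longest expected survival after transplant
    wait_years: float


def offer_order(organ_percentile: float, candidates: List[Candidate]) -> List[Candidate]:
    """Order candidates for one organ under a toy longevity-matching rule.

    organ_percentile is a hypothetical organ-quality percentile (0.0 = longest-lasting organ).
    """
    by_wait = sorted(candidates, key=lambda c: c.wait_years, reverse=True)
    if organ_percentile <= TOP_FRACTION:
        # The best organs are offered first to recipients expected to live longest...
        top = [c for c in by_wait if c.survival_percentile <= TOP_FRACTION]
        rest = [c for c in by_wait if c.survival_percentile > TOP_FRACTION]
        return top + rest
    # ...while every other organ is open to all candidates, by time waited.
    return by_wait


pool = [
    Candidate("A", survival_percentile=0.10, wait_years=2),
    Candidate("B", survival_percentile=0.60, wait_years=5),
    Candidate("C", survival_percentile=0.15, wait_years=4),
]
print([c.name for c in offer_order(0.05, pool)])  # ['C', 'A', 'B']
print([c.name for c in offer_order(0.50, pool)])  # ['B', 'C', 'A']
```

Candidate B, the longest waiter, is not locked out: B is offered most organs first and only moves behind A and C for the top-quality ones, which is the “remapping” rather than exclusion described above.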

BT: A lot of the book is about the process of revamping this algorithm. In terms of stakeholder participation, transparency, and consensus building, it sounds like this was a real success story, but it also took 10 years, which feels like an eternity. So what can we learn from this example? And how can we apply it in other places, particularly to the sorts of high-stakes algorithms that involve public goods?

DR: Yeah. When I was first getting into this, I didn’t really know anything about medicine as such. I came at it because I’d seen things go wrong in courtrooms and welfare offices, and I thought, there’s got to be a better way. Actually, I was teaching a class at Georgetown about governing algorithms and how to make the moral choices in them; this was back when I worked in public policy and lived in Washington. A couple of my students identified the organ system as an interesting example where the people designing it seemed to be pretty careful about this. And when I saw their term paper, I thought, this is so great. Here’s a useful real example. So many people have theories about how to make algorithms more ethical. There’s lots of blue-sky thinking and there are many ideas on the whiteboard, but there are few concrete examples. And here is one.

I actually wanted my students to write a paper about it, but both of them were about to go off and become practicing attorneys. So it sat there as an unexplored idea. A couple of years later, I ended up leaving Washington and moving to Cornell to pursue this idea and build what became this book, because I think it is a really useful example.

When I first started out, I thought, oh, we need public input into the design of these systems. But even that word, input, is kind of a technical word. It implies some kind of mechanical process, like ore that you mine somewhere and then feed in to make steel.

But in fact, this whole process was very human and very messy and very squishy. For one thing, people’s opinions were not static, like ore that you dig out of the ground. People’s opinions changed over time as they debated with each other, and they gradually built a shared sense of what was possible and of what would be fair, or at least better than the system they had had before. That kind of gradual consensus process is much more organic than an algorithm might look on paper.

One analogy that brought things into focus for me was a rock tumbler, the kind used for jewelry: you polish rocks by tumbling them together until the hard edges are worn away, and you end up with something that maybe sparkles a little more and is a little worn and a little softer. That’s what it was like. And it took a long time.

So I think one thing this whole story reminds us is that democracy is messy. Just because we’re doing democracy right doesn’t mean we’re necessarily going to get everything we want. In fact, nobody got everything they wanted. What we all got was a mutually tolerable compromise. But in today’s environment, I think getting even to that point is no small feat.

BT: No matter how you set the rules, there have to be some boundaries, which can seem arbitrary, and you encountered a boundary question that had to do with decimal places. Can you explain that situation?

DR: Near when I was finishing the book, I got a call from several of the data scientists at the United Network for Organ Sharing, the federally designated nonprofit that actually runs this allocation process and the design process for the algorithm. What they were asking was, how many decimal places should we use when we’re calculating people’s allocation scores?

At first I thought, that’s a really technical question. Why would you ask me? Shouldn’t the experts decide how many decimal places to use? But what eventually became clear as I was talking to them was that this is actually a moral decision, and here’s why: They could use all the data they have and calculate these scores out to the umpteenth decimal place for each patient. So maybe some tiny difference in the 14th decimal place between your allocation score and mine, if we both needed a transplant, would determine which of us would get the organ. But at that point, the difference in our scores doesn’t describe a real medical difference between our situations. If the scores are that close, then we’re basically both equally ready to benefit from a transplant, and the tiny numeric difference between our scores is being used as an excuse, or a pretext, to give the organ to one person and not the other.

Now, sometimes maybe we do need an arbitrary reason to decide that one person gets an organ and another doesn’t. After all, we only have the one organ to give out in that moment. We can’t give it to both people. And maybe we need to flip a coin or something like that. But what the data scientists were saying was, we shouldn’t pretend that the data really tell us which patient to give this organ to. If there’s not a clinically meaningful difference in the data, and if what we really need to do is to flip a coin, we should be honest about that, which I thought was a really impressive moment of moral humility on the part of these experts.
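The data scientists’ point can be made concrete with a small sketch. It is hypothetical, not UNOS’s code: the scores, the `decimals` setting, and the random tie-break are assumptions for illustration. Rounding to a precision that reflects a real clinical difference turns meaningless gaps into explicit ties, and an openly random draw, the coin flip mentioned above, then settles the tie instead of letting noise in a far decimal place decide.

```python
import random
from typing import Dict, List


def tied_leaders(scores: Dict[str, float], decimals: int) -> List[str]:
    """Return every patient whose score, rounded to `decimals` places, is the best."""
    rounded = {name: round(score, decimals) for name, score in scores.items()}
    best = max(rounded.values())
    return sorted(name for name, value in rounded.items() if value == best)


def pick_recipient(scores: Dict[str, float], decimals: int, rng: random.Random) -> str:
    """Break any remaining tie with an explicit, openly random draw."""
    return rng.choice(tied_leaders(scores, decimals))


scores = {"you": 0.873412, "me": 0.873409, "other": 0.61}  # hypothetical scores
print(tied_leaders(scores, decimals=6))  # ['you']: a difference in the 6th place decides
print(tied_leaders(scores, decimals=2))  # ['me', 'you']: an honest tie
print(pick_recipient(scores, decimals=2, rng=random.Random(7)))  # either 'me' or 'you'
```

Which precision counts as clinically meaningful is exactly the judgment the data scientists were weighing; the sketch only turns that choice into a visible parameter.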

BT: So organ donation is a big public system. Do the same concepts apply to developing algorithms for private organizations when the algorithm is going to affect an entire community, say a university or a large company?

DR: I would imagine the answer is going to depend. I know “it depends” is probably not a satisfying answer, but different organizations and different contexts have different cultures and different rules that apply to them. For example, one of the questions I was asked recently was, what about the admissions algorithms for a university? It so happened that the person who posed this question was a student at a public university. And it occurs to me that if we’re talking about a taxpayer-funded public institution, there might be a strong rationale for being explicit and public about how we give out those valuable and scarce university seats. Whereas if the institution is a place like Princeton, or even a theological seminary or other private institution, there might not be the same rationale for making things public. Similarly, the commercial domain is certainly a frontier, in terms of regulation and how to think about it, and I think we’re just starting to work through those questions.

BT: We’ve talked a great deal about the work you’ve been doing, but can you tell me a bit about your path to this work and what role Princeton may have played, both in your experience as a student and at the Center for Information Technology Policy, where I know you also worked? What role did those experiences play in your path to the work you do today?

DR: Well, I wouldn’t be here if I hadn’t been there, that’s for sure. I’ve always been interested in the human impact of technology, and partly that comes from my own life: I have a mild case of cerebral palsy. I had it as a child, and I have it now. My handwriting was really wobbly, so when I was little, they gave me a word processor to use in school. This was in, I guess, the early 1990s. And it changed my life, because instead of being a bad writer who produced an illegible scrawl, I could type, and I could move text around after the fact. Not only did this compensate for my original disability, but it put me way out ahead of where most people, or I, would’ve been with pen and paper if I hadn’t needed the computer in the first place, because it could do so much more. And of course, my work came out of the printer with the subtle imprimatur of authority that typed documents have over handwritten ones.

So anyway, one of the things I noticed was that it wasn’t a brand-new technology. The thing that changed my life wasn’t a lab invention; there had been word processors for years before. What changed was the rules, right? The policy changed so that I could bring a computer into the classroom with me, and that was a change in the rules that really happened at a human level. So all through my life, I think, I’ve been fascinated by the rules that surround technology and how we decide when and how we’re going to use it. I carried that fascination with me into undergrad, where I took a seminar on public policy and technology with Professor Ed Felten in the computer science department.

I was in Oxford for a couple of years immediately after Princeton, but then came back to become a staff member, the associate director of the then-new Center for Information Technology Policy, and to help Ed set up this research center, a collaboration between what we then called the Wilson School and the computer science department: public policy and computer science together. It really was trying to get at these values questions, or let’s call them mixed questions, where in order to do it right you need both some values or philosophy piece and some deep knowledge of how the technology is built and how it could be made differently. So we got into questions around open government data. We wrote an academic paper that helped to spark data.gov, an effort to make federal information more transparent and easier to reuse, partly by publishing it in ways that people could throw onto a map or analyze.

Later, I teamed up with a colleague from the lab, Harlan Yu, who was finishing his Ph.D. in computer science at that time. I went off to law school to get my law degree, while he was getting his doctorate from Princeton; he’d been a student of Ed’s. We banded together and thought we could really combine these areas of expertise and make it easier, in particular as it turned out, for public interest advocates to engage on these issues. I remember one of our early mottos was: you know the issues, but technology is changing what’s possible.

So if somebody was a criminal justice reform person or a health care advocate or something like that, they probably hadn’t gotten to where they were by being a computer nerd. But now, because of the increasing adoption of algorithms in these high-stakes settings, it was becoming necessary for them to really engage with the details of how these systems were built. And that required resources to bring computer science and engineering expertise into the values conversation.

And that’s what Upturn, the NGO that Harlan and I founded together, did. I’m very, very proud to say that Upturn continues to do that now. Harlan is directing it, and it’s a much larger team than the two of us in the living room where it began. It’s of course a thrill to see something continue and thrive after one moves on from it.

BT: And can you tell me a bit about what you’re doing now, where you are?

DR: I’m a visiting scholar at UC Berkeley, where I’m part of an algorithmic fairness and opacity group, and I’m also a faculty member at Apple University, the internal learning and development group for Apple employees. One of the ways Steve Jobs used to explain what was distinctive about Apple was to say, we’re at the intersection of technology and the liberal arts. So there’s a real belief in integrating many different kinds of expertise: engineering, but also aesthetics and design (famously, Jobs had a strong interest in calligraphy), and of course human values in all kinds of ways. What I’m doing here, I hadn’t thought of it this way, but now that you ask, I guess really continues that tradition of bringing technical expertise together with more human kinds of expertise, and of helping create space for colleagues here at Apple to reflect on some of these deep questions.

BT: Well, it certainly sounds like there is much interesting work ahead. David, thank you again for joining me.

DR: Of course. Always a pleasure.

PAWcast is a monthly interview podcast produced by the Princeton Alumni Weekly. If you enjoyed this episode, please subscribe. You can find us on Apple Podcasts, Google Podcasts, Spotify, and Soundcloud. You can read transcripts of every episode on our website, paw.princeton.edu. Music for this podcast is licensed from Universal Production Music.

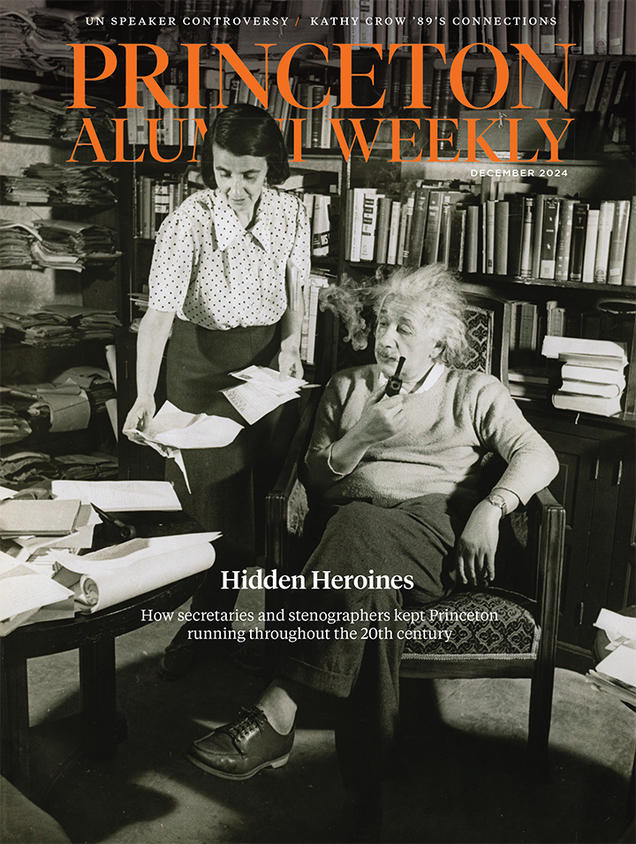

Paw in print

December 2024

Hidden heroines; U.N. speaker controversy; Kathy Crow ’89’s connections