Computer Science: Troubling Online Shopping Habits? Vendors’ Practices Might Be to Blame

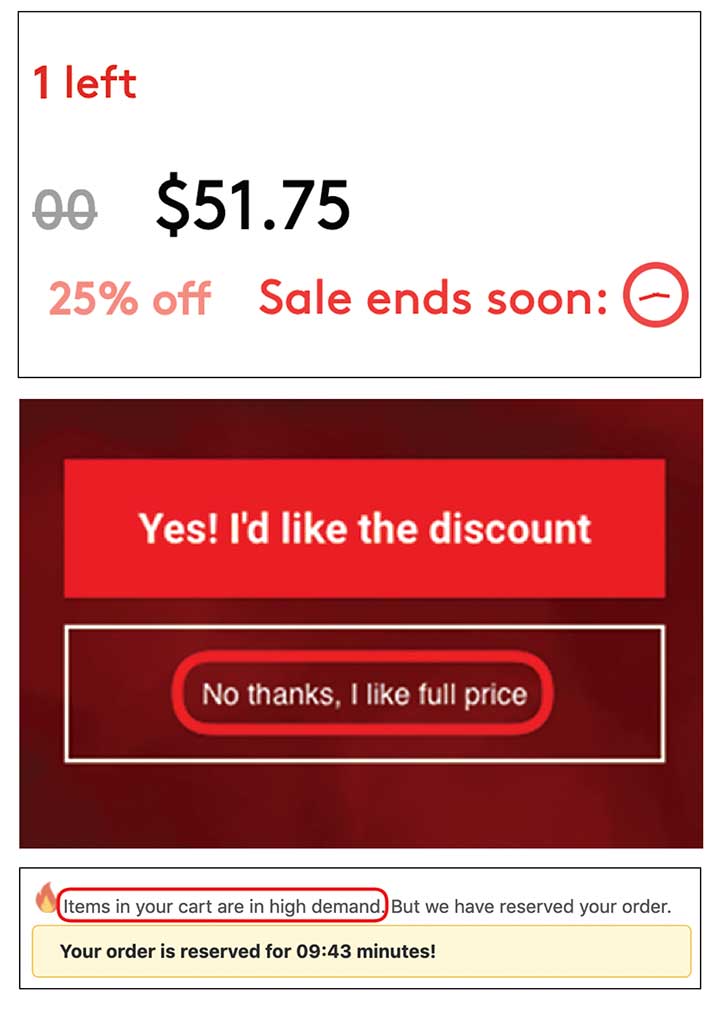

“Dark patterns” may sound like something from The Matrix, but if you shop online you’ve probably seen countless examples: timers counting down to the end of a sale, messages that supplies are limited, fees that sneak into your shopping cart.

Dark patterns, a term coined by British web designer Harry Brignull for designs that nudge, trick, or force consumers into purchases, date back to the advent of online shopping. In June, a team of researchers from Princeton and the University of Chicago became the first to catalog how hundreds of e-commerce sites use these methods. The researchers hope their catalog of nearly 2,000 dark patterns on more than 1,300 sites will pressure companies to reconsider their practices and spur regulation.

Members of Princeton’s Web Transparency & Accountability Project (WebTAP) used automated web-crawling programs to assemble a list of the dark patterns the programs could see in a page’s text. Then they classified the dark patterns’ methods systematically.

Some patterns deceive users, say, about how many items are left in stock, and some restrict their choices, for example by not allowing customers to create an account without granting access to their Facebook account (and its valuable data). Others exploit foibles of our psychology: “Urgency” patterns, for instance, exploit our “scarcity” bias, making offers look more valuable by making them seem rare, whether through a digital countdown timer or the age-old strategy of a limited-time offer. “Sneaking” patterns add products, fees, or subscriptions to customers’ carts without alerting them until just before the sale, while “confirmshaming” menus frame a decision as a choice between the website’s favored option and one like “No thanks, I hate saving money.”
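To make the idea of spotting dark patterns in a page’s text concrete, here is a minimal Python sketch. It is not the researchers’ crawler or their classification method: the category labels, the phrase list, and the function name `flag_dark_patterns` are all assumptions made up for illustration, and a real study would need far more than keyword matching.

```python
import re

# Hypothetical phrase patterns, chosen for illustration only.
# They are NOT taken from the study described in this article.
PATTERNS = {
    "urgency": re.compile(r"\b(sale ends in|limited[- ]time offer|hurry)\b", re.I),
    "low_stock": re.compile(r"\bonly \d+ left\b", re.I),
    "confirmshaming": re.compile(r"\bno,? thanks,? i\b", re.I),
}


def flag_dark_patterns(page_text):
    """Return the sorted category labels whose phrases appear in the text."""
    return sorted(
        label for label, regex in PATTERNS.items() if regex.search(page_text)
    )


if __name__ == "__main__":
    sample = "Only 3 left in stock! Sale ends in 02:15:00"
    print(flag_dark_patterns(sample))
```

A text match like this only flags candidates. It cannot tell whether a low-stock message is true, which is why deciding that a message is actually deceptive takes further checking.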

The project “was a good fit for WebTAP,” says postdoc Gunes Acar, who co-authored the study. Since the team had previously created automated web-browsing programs to survey websites’ privacy and tracking practices, they needed only to tweak these “crawlers” to look for dark patterns.

According to Arunesh Mathur, a third-year computer science Ph.D. student who was first author on the study, more research would be helpful, especially on platforms such as mobile apps or smart TVs.

Some dark patterns are probably illegal, says Jonathan Mayer ’09, assistant professor of computer science and public affairs. “The Federal Trade Commission Act prohibits deceptive business practices,” he says, as do many state laws. Still, many sites display deceptive messages about limited stock or high demand.

A bipartisan bill called the DETOUR Act, aimed at regulating dark patterns, would ban “sneaking” practices, among others, says Mayer. But the question of where the line should be between legal marketing and unacceptable manipulation remains open, he says: “Drawing that line is one of the top consumer-protection challenges today — it will involve law, policy, social science, and computer science.”
